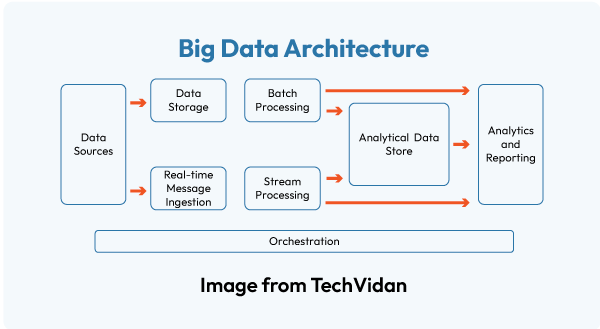

Data Warehouse, also known as enterprise data warehouse, is considered as one of the core elements of BI (Business Intelligence). Data warehouse is a system or means for reporting and data analysis and also supports the decision-making process. The process of planning, constructing, and maintaining a data warehouse system is called data warehousing.

Now, to have an in-depth knowledge of what ‘cloud data warehousing’ is all about, you need to first know and understand the most important aspects and practical details of the concept. Listed down are the terminology that you must know to be a master of cloud data warehouse system. Enjoy diving in-

A

Ad-Hoc Query: A query or a command which is created for specific purpose when issue remains unresolved with predefined datasets. Contrary to a predefined query, an ad-hoc query gives different results depending upon the variable. The output value cannot be predefined. It is created dynamically based on user’s demand.

Aggregation: Facts aggregated from a raw level to higher levels in different dimensions in order to mine business–related data or service from it with faster approach. For selected dimensions, facts are summed up from the original fact table. This speeds up the query performance. The aggregated facts or summaries are done over a specific period.

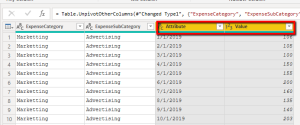

Attribute: It refers to any particular column or distinct type of data in a dimension table.

Attribute Hierarchy: There are 3 different levels of hierarchy of Attribute members: Leaf level (different attributes), Intermediate level (Parent-child hierarchy) and the Optional level (sum of the value of the attribute hierarchy members).

Application Gap: A recorded difference between some parts of a business prerequisite and application system’s ability to meet the necessary requirements.

Automatic Encryption: The automatic encryption of data to keep it unaffected from the external influence. Data encryption helps translate data into readable text or code. This allows the users who have access to secret key to read, use and analyze it.

B

Backup and recovery strategy: A strategy that prevents loss of important business data from the enterprise hardware or software due to any technical or natural faults.

Baseline: A baseline is a point that signifies deliverability of any project. It is a milestone or a point that can calculate what changes are to be made in the project.

Business Metadata: The data of knowledge composed for the users, which helps them understand the data warehouse. It concentrates on what data warehouse consists of, data source, data relevance, etc…

Business Intelligence: The objective of Business Intelligence is to provide data related to business operations which helps in making right decision at the right moment.

Backend Tool: It is a software that helps in the extraction process, typically resident on both the client and the server, that assists in the production data extract process.

C

Conformed Dimension: It is a dimension that has the same meaning when being referred from different fact tables. Conformed dimensions allow facts and measures to be categorized and described in the same way across multiple facts and/or data marts, ensuring consistent reporting across the enterprise.

Case tools: A complete set of application development tools and CASE (Computer-Aided Systems Engineering) that help in the development of software.

Category: An architecture for managing, indexing, and representing a dimension of a multidimensional cube.

Central Repository: A place where a set of documentation are saved, adapted, customized, reformed, or enhancements designed to alleviate the reformation of accomplished work.

Client/Server: A kind of technical structural design that links many workstations or PCs (Personal Computers) to one or more servers. Generally, Client manages the UI, probably with some local data.

Cluster: A platform or a channel of saving a set of data from multiple tables, when the data in those tables holds similar information accessed concurrently.

Column: A process of implementing a part of data (sort by date, character, format) within a table. It can be optional or mandatory.

Catalog: A module of a data dictionary that explains and manages number of aspects of a database (say folders, dimensions, functions, queries, etc…)

Cross Tab: A kind of multi-dimensional report that exhibits values or measures in cells created by the intersection of two or more dimensions in a table format.

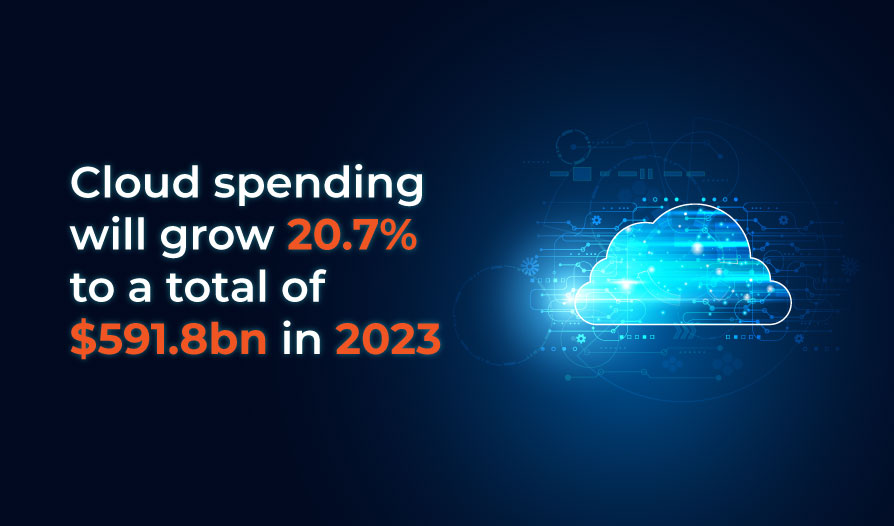

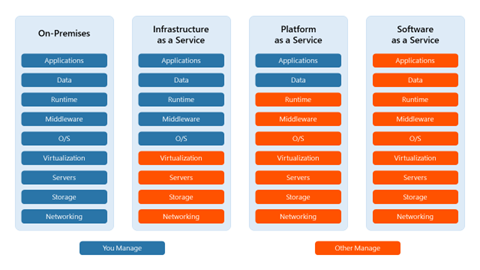

Cloud computing: Cloud Computing mainly refers to the provision of IT resources by a provider via the Internet (fixed and mobile).

Cube: It is a multi-dimensional data matrix that has several dimensions of independent variables and measures (dependent variables) that are developed by an OLAP (Online Analytical Processing System). With multiple levels, each dimension is organized into a hierarchy.

Cloud Analytics: Cloud analytics is a service model in which sub-elements of the business intelligence and data analytics process are provided through a public or private cloud. Cloud analytics applications and services can be available as a subscription-based or utility (pay-per-use) pricing model

D

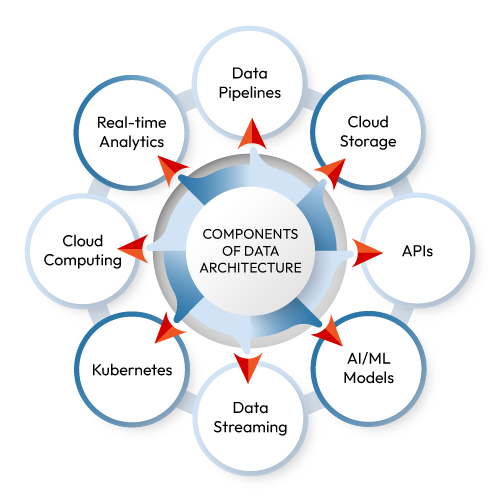

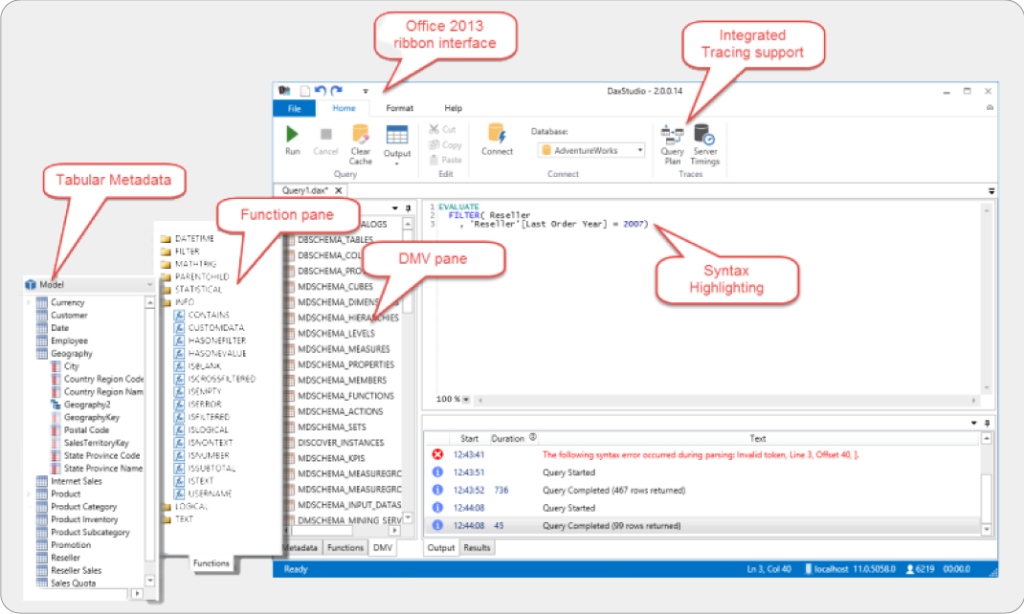

Data Analytics: Data analytics is the process of querying and interrogating data in the pursuit of valuable insights and information.

Data link: Created by UCSD’s Data Warehouse team, Data Link is a web-based tool. It gives a sheer knowledge of the data, data history, database, tables, available SQL queries and fields used in DARWIN.

Data Mining: The practice of identifying the relationship and pattern of set of data that involves various techniques.

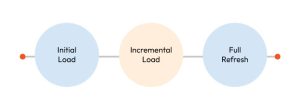

Data Refresh: The process by which all or part of the data in the warehouse is replaced.

Data Synchronization: Keeping data in the warehouse synchronized with source data.

Data Mart: Data marts contain a subset of organization-wide data that is valuable to specific groups of people in an organization.

Dimension: Information and data of same type. For example- Time Dimension type will contain information of year, month, day, and week.

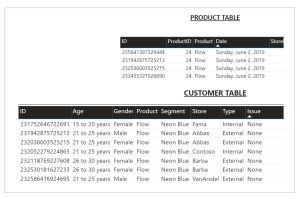

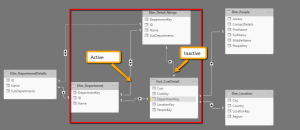

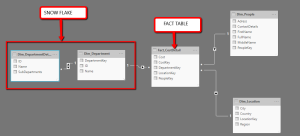

Dimensional Model: A type of data modeling suited for data warehousing. In a dimensional model, there are two types of tables: dimensional tables and fact tables. Dimensional table records information on each dimension, and fact table records all the “fact”, or measures.

Drill Across: Data analysis across dimensions.

Drill Down: Data analysis to child attribute used to zoom in to more detailed data by changing dimensions

Drill Through: Data analysis that goes from an OLAP cube into the relational database.

Drill Up: Data analysis to a parent attribute.

Data Map: A technique for creating a balance or data elements mapping between 2 different data models. For wide variety of data integration works, data mapping is used as the first step. Data mapping is used to create a match between data sources and target database element.

Data Lake: Data lake is storage of massive amount of data in its native format until the rise of its requirement. It uses flat architecture for storage.

Data Protection: It is a process of safeguarding important information from corruption and/or loss.

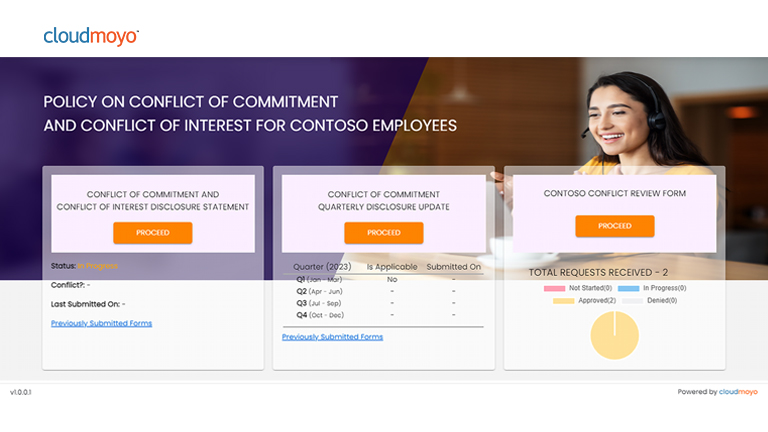

Decision Support System: A software system used to support decision-making processes within an organization.

Data quality: Quality that determines the reliability of data. High-quality data needs to be complete, accurate, available and timely.

Data cube: A data cube helps us represent data in multiple dimensions. It is defined by dimensions and facts. The dimensions are the entities with respect to which an enterprise preserves the records.

Data cleansing: With the help of data cleansing it is possible to remove and to correct data errors within a database or other information systems. Examples for such procedures are the erasing of data duplicates or the compression of similar information.

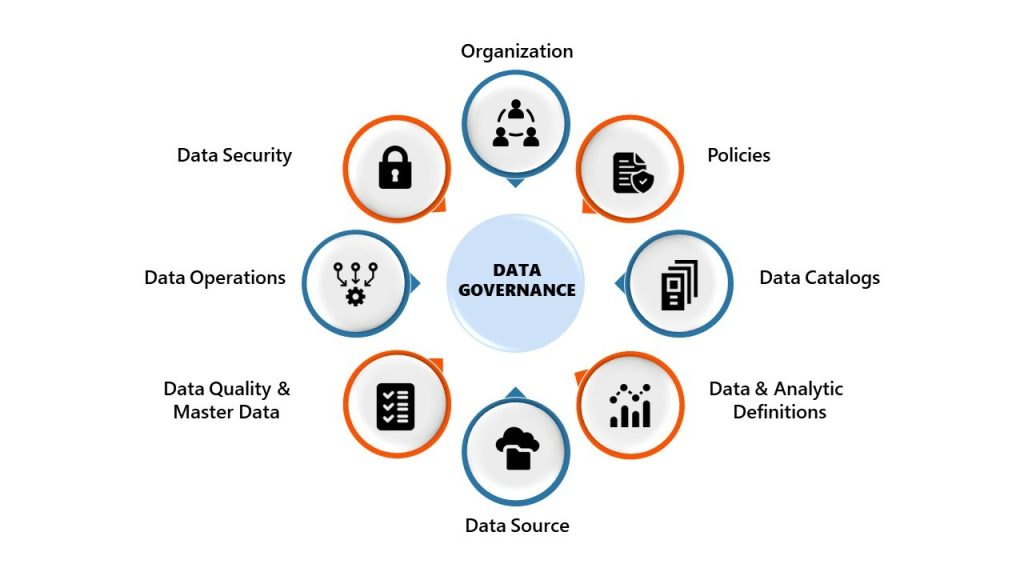

Data Governance: A structured and standard process of maintenance of data and transformation of data into valuable, practical and functional information.

Data Mashing: A process of reconsolidation and merging the new data with the already existing content.

Data migration: A process of transferring data between storage devices or computer systems – preferably without disrupting active applications. This process is usually achieved programmatically with database queries, developing custom software or with external migration tools.

Data Vault: A method that enables a process and approach to modeling your enterprise data warehouse.

Denormalize: Denormalize means to allow redundancy in a table so that the table can remain flat.

Degenerate Dimensions: A dimension key with no attributes (or actual dimension table), such as an invoice number etc.

Data Dictionary: A part of a database that carries meaning of data objects.

Data Extraction: The process of pulling data from operational and external data sources in order to prepare the source data for the data warehouse environment.

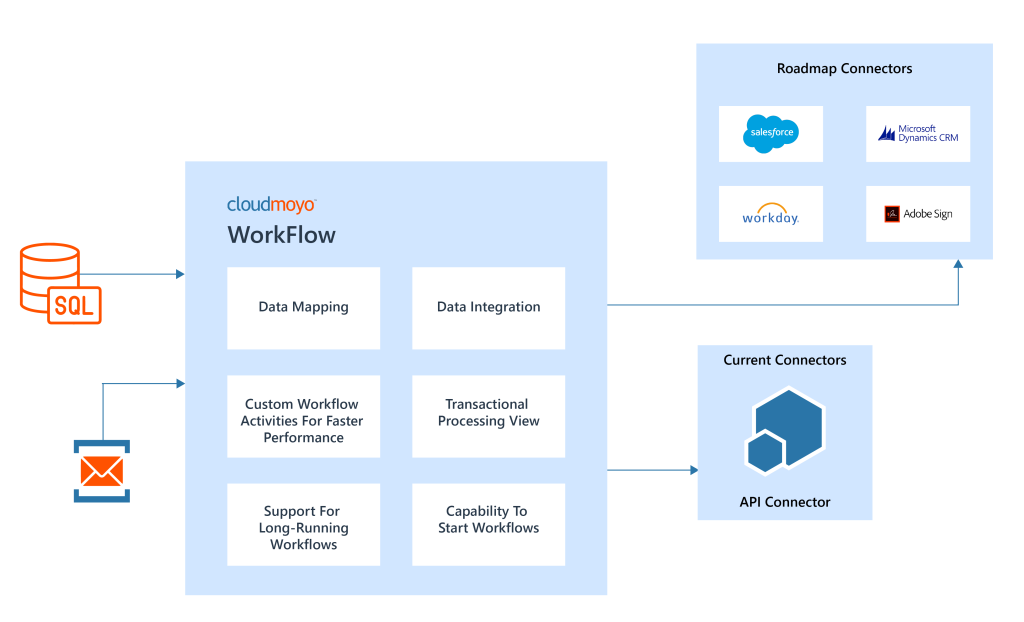

Data Integration: The movement of data between two co-existing systems. The interfacing of this data may occur once every hour or a day, etc.

Data Integrity: The data quality that rests in the database objects. Criteria that users verify when analyzing the data reliability and data value.

Data Replication: The process of creating a replication or copy of data to/from the sites to enhance the local service response times and availability.

Datastore: A temporary or permanent storage concept for logical data items used by specified business functions and processes.

Data Scrubbing: The process of manipulating or cleaning data into a standard format. This process may be done in conjunction with other data acquisition tasks.

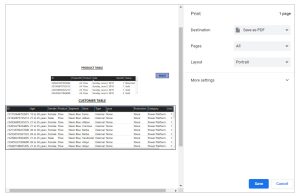

Data Source: It’s accessed during the process of data acquisition. An external system or an operational system or third-party system that enables data to gather the information required by the uses.

Dimension Table: A table that contains discrete values in a spreadsheet.

Distributed Database: A physically located database on multiple computer processors is called as distributed database. It is linked through some means of communications network. An essential feature of a true distributed database is that users or programs work as if they had access to the whole database locally.

E

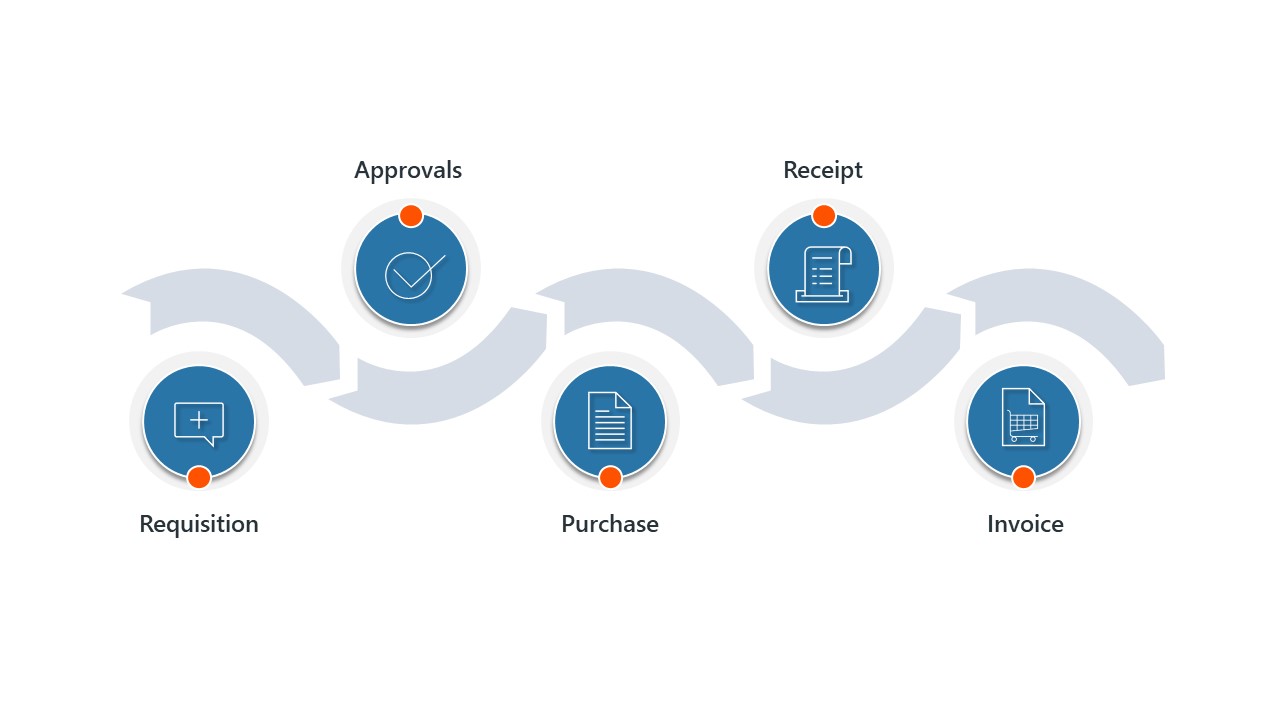

Enterprise Resource Planning: Enterprise resource planning (ERP) is a system that integrates and manages internal and external information in a organization. A ERP-System is used for a company to maintain and use data in a flow for the organization to use the advantage of being connected to vendors, customers etc

Executive Information System: A crisp collection of a high-level, customized graphical view of the enterprise data enabling management to scan/view the overall status of the business.

Entity Relationship Model: A part of the data model of business that comprises multiple Entity Relationship Diagrams.

External Data Source: An external data source of the data files/folder or system that is catered to the client.

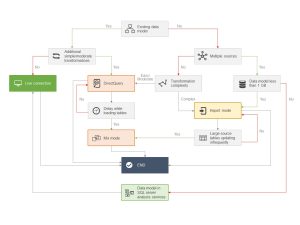

Extraction, Transformation and Loading (ETL) Tool: ETL Tool is a software that is used to extract data from a data source like a operational system or data warehouse, modify the data and then load it into a data mart, data warehouse or multi-dimensional data cube.

F

Fact Table: Structured by a composite key, each of whose objects is a foreign key extracted from a dimension table, a fact table is the central part in a star join schema.

Forecasting: Forecasting is a prediction of the actual business condition presented with statistical methods.

Foreign Key: A foreign key is a column or a set of columns in a table whose values correspond to the values of the primary key in another table. In order to add a row with a given foreign key value, there must exist a row in the related table with the same primary key value.

Field: A means of implementing an item of data within a file. It can be in character, date, number, or other format and be optional or mandatory.

File Transfer Protocol (FTP): The physical movement of data files between applications, often across sites.

Format: The type of data that an attribute or column may represent; for example, character, date, number, sound, or image.

G

Granularity: The level of detail of your data within the data structure.

H

Hierarchy: The logical structure tree or managing data according to its level. The individual level of the hierarchy is further denoted as categories. The individual elements within a level are referred to as categories

Hybrid OLAP: Also known as HOLAP is the combination of the technologies called ROLAP (Relational OLAP) and MOLAP (Multidimensional OLAP). It allows storing part of the data in a multidimensional database and another part of the data in a relational database and allows using the advantages of both technologies.

I

Indexing: An index is a link between one table and another for rapid access to the rows of a table based on the values of one or more columns in another table.

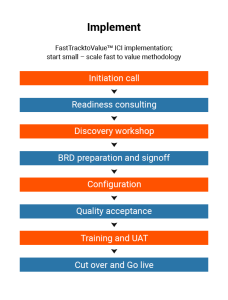

Implementation: The installation of an increment of the data warehouse solution that is complete, tested, operational, and ready. An implementation includes all necessary software, hardware, documentation, and all required data.

Information Access Model: Information Flow Model is a model that visually depicts information flows in the business between business functions, business organizations and applications.

J

Junk Dimensions: Attributes which are not a part of any current dimension tables or a fact table.

JSON: It is a semi-structured data format that can be used in multiple apps, but has become more common as a format for data transmission between servers and web applications or web connected devices.

L

Legacy System: A current repository system of data and processes.

Link Test: A test to discover errors in linked modules of an app system.

Logical Data Warehouse Architecture: It is a framework which sketches the complete functions and elements of a strategic warehouse. This includes warehouse management, ETL components, metadata repository, data classes, relational and multidimensional databases, etc.

M

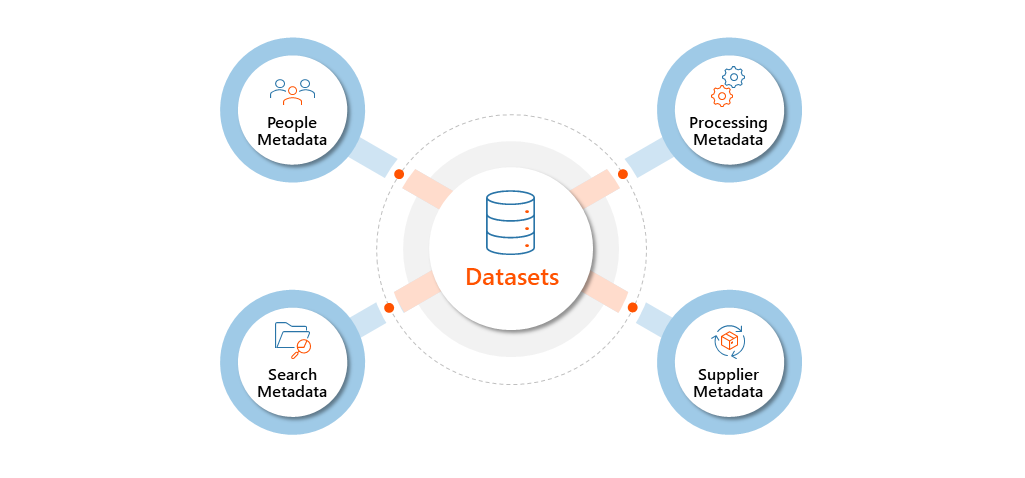

Metadata: Information/description about the particular data.

Metric: A measured value. For example, total sales is a metric.

MPP: The acronym MPP or Massively Parallel Processing is the synchronized processing of a particular program by number of processors that operate on multiple parts of the program, every processor using its individual operating system and memory.

Middleware: A system that makes it easier for the software to exchange data between end users and databases.

Mission Critical: A system that if it fails effects the viability of the company

MOLAP: Multidimensional OLAP system that stores data in the multidimensional cubes.

N

Natural Hierarchy: In general, a hierarchy is a collection of levels based on attributes. With that said, there are existing natural hierarchies, like country, state and city as well as year, month, week, and day also known as time hierarchy. These two examples represent a natural relationship related to attributes. This type of hierarchy has only one parent and also indicates the member attribute above it. This gives the idea to develop more user-defined-hierarchy i.e. more individual hierarchies.

Normalization A technique to eliminate data redundancy.

Non-Volatile Data: Data that is static or that does not change. In transaction processing systems, the data is updated on a regular basis.

O

OLAP: Online Analytical Processing (OLAP) is an online data recovery approach and its analysis to disclose the current trends and statistics of the business, which is not directly visible in the data that is retrieved from a data warehouse directly. This process is also known as multidimensional analysis.

Operational Datastore (ODS): A database designed to integrate information from different sources for add-on data operations for reporting and operational decision support.

Operational Data Source: The current operational system, which encompasses the data source for the ETL process (Extracted, Transform and load to the data warehouse database objects.)

P

Primary Index: An index used to improve performance on the combination of columns most frequently used to access rows in a table.

Primary Key: A set of one or more columns in a database table whose values, in combination, are required to be unique within the table.

Problem Report: The mechanism by which a problem is recorded, investigated, resolved and verified.

Parallel query: A process by which a query is broken into multiple subsets to speed execution

Partition: The process by which a large table or index is split into multiple extents on multiple storage areas to speed processing.

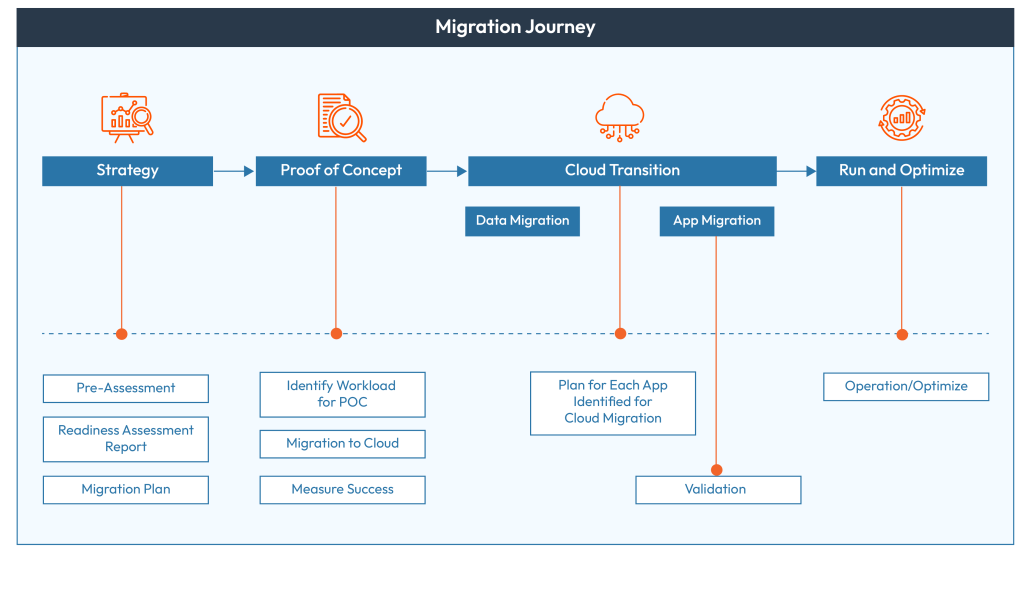

Proof-of-Concept: An approach, usually coming from an experiment for demonstrating immediate business concept, proposal, its design, etc. are feasible.

Q

Query link: QueryLink is a Web-based tool for easy access to Data Warehouse information without knowing a programming language.

Quality Review A review used to assess the quality of a deliverable in terms of fitness for purpose and adherence to defined standards and conventions.

R

Record: A record is an entry in a file, in a non-relational database system, containing of data of each element, which together cater complete details of an element the data required by the system.

Referential Integrity Constraint Rules that specify the correspondence of a foreign key to the primary key of its related table.

Refresh: An approach that gives you an option to update the database objects of the data warehouse with fresh data. This procedure is monitored through the data warehouse management processes and appears on a scheduled basis after the initial load.

Relational Database Management System (RDBMS): A DBMS (database management system) in which data can be seen and manipulated in a tabular form . Data can be sorted in any order and tables of information are easily related or joined to each other.

Relational Online Analytical Processing (ROLAP): OLAP software that employs a relational strategy to organize and store the data in its relationship database.

Reporting Database A database used by reporting applications. Reporting databases are often duplicates of transaction databases used to off-load report processing from transaction databases.

Repository: A tool for storing any facts, figure or info about the system at any point in its life-cycle. This is used for mainly for recovery, extensibility, integrity, etc…

Replication: The process of copying data from one database table to another.

S

Schema: An information model implemented in a database is called Schema. It may be a logical schema, which may not include any optimization. It may be a physical schema that includes optimization or customization.

Scalability: The capability to increase numbers of users and volumes of data to the data warehouse system. This is an important ability for the technical architecture of the cloud data warehouse.

Snowflake Schema: A common form of dimensional model. In this, number of hierarchies in a dimension can be extended into their individual dimensional tables.

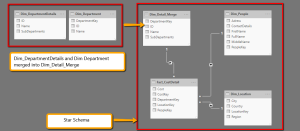

Star Schema: A common form of dimensional model. In a star schema, a single dimension table represents each dimension.

Snapshot: Specifically defines a fact table that denotes the state of affairs at the end of each time period.

SQL (Structured query language): A standard language for creating, modifying, and querying an RDBMS.

Start Schema: A collection of dimensions joined together with a single fact table that is used to construct queries against a data warehouse.

Summarization: The process by which data is summarized to present to DSS or DWH users.

SaaS: Software as a Service allows software to get license on a subscription basis. It is a software licensing and delivery model in which software centrally hosted.

Slice and dice: It is the typical description for data access, equally via any of its dimensions

T

Table: A tabular view of data, on a relational database management system, defined by one or more columns of data and a primary key. A table populated by rows of data.

Tablespace: A logical portion of a database used in allocating storage for table data and table indexes.

Target Database: The storage of the source data, in a data warehouse database object, once it is extracted, transformed and transported.

Transmission Control Protocol/Internet Protocol (TCP/IP): It provides a link to transmit data across the web.

Twinkling Database: In this, the data you are trying to query is not stable. It is constantly changing.

U

Uniform Resource Locator (URL) is the path information in an HTML-coded source file used to locate another document or image.

Usability: That quality of a system that makes it easy to learn, easy to use and encourages the user to regard the system as a positive help in getting the job done.

Unbalanced Hierarchies: An unbalanced hierarchy exists if any branches of the hierarchy descent to different levels. In other words, in an unbalanced hierarchy, not every leaf in the hierarchy has the same level.

User-defined-hierarchy: A hierarchy of attributes, which is used to manage the members of a dimension into hierarchical structures by catering navigation paths in a Cube. For example, take a dimension table that supports three attributes, named Year, Quarter and Months. The Year, Quarter and Month attributes are used to construct a user-defined-hierarchy, named Calendar in the time dimension

V

View: A means of accessing a subset of data in a database

Virtual Warehouse: The view over an operational data warehouse is known as virtual warehouse. It is easy to build a virtual warehouse. Building a virtual warehouse requires excess capacity on operational database servers.

W

World Wide Web: The World Wide Web is a hypermedia application used for access of data over the Internet. The WWW is based on the HTML standard of marking up documents.