The world of data has been growing exponentially, and the data management industry has changed completely from what it was a few years ago. Around 90% of today's data was generated in just the last couple of years. According to a report by Domo, we produce nearly 2.5 quintillion bytes of data every day, which means massive amounts of data are generated every minute. With technological transformation, data has become a critical factor in business success. Above all, processing data in the right way has become pivotal for many businesses around the globe.

Terms like data lake, Extract, Transform, Load (ETL), or data warehousing have evolved from being obscure buzzwords to widely accepted industry terminology.

Today, data management technology is growing at a fast pace and providing ample opportunities to organizations. Yet organizations these days are full of raw data that needs filtering, and systematically arranging that data into actionable insights for decision-makers is a real challenge. Meaningful data accelerates decision-making, and ETL tools can help turn raw data into meaningful data.

Need for Extract, Transform, Load

Data warehouses and ETL tools were created to help you get actionable insights from all your business data. Data is often stored in multiple systems and in various formats, making it difficult to use for analysis and reporting. The ETL process extracts data from these sources, transforms it into a consistent format, and loads it into a data warehouse or data lake where it can be easily accessed and analyzed.
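To make the three stages concrete, here is a minimal Python sketch using only the standard library. It assumes two hypothetical sources, a CSV export and a JSON feed, that describe the same kind of records with different field names. The function names, fields, and the in-memory SQLite database standing in for a warehouse are all illustrative, not part of any particular ETL tool.

```python
import csv
import io
import json
import sqlite3

# Hypothetical sources: the same kind of record in two formats and field layouts.
CRM_CSV = "id,name,amount\n1,Alice,120.50\n2,Bob,75\n"
BILLING_JSON = '[{"customer_id": 3, "customer": "Carol", "total": "42.00"}]'


def extract():
    """Extract raw records from each source in its native format."""
    crm_rows = list(csv.DictReader(io.StringIO(CRM_CSV)))
    billing_rows = json.loads(BILLING_JSON)
    return crm_rows, billing_rows


def transform(crm_rows, billing_rows):
    """Map both sources onto one consistent schema: (id, name, amount)."""
    unified = []
    for row in crm_rows:
        unified.append((int(row["id"]), row["name"].strip(), float(row["amount"])))
    for row in billing_rows:
        unified.append(
            (int(row["customer_id"]), row["customer"].strip(), float(row["total"]))
        )
    return unified


def load(rows, conn):
    """Load the unified rows into a warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline(conn):
    """Run extract -> transform -> load against the given connection."""
    crm_rows, billing_rows = extract()
    load(transform(crm_rows, billing_rows), conn)


if __name__ == "__main__":
    warehouse = sqlite3.connect(":memory:")
    run_pipeline(warehouse)
    print(warehouse.execute("SELECT * FROM sales ORDER BY id").fetchall())
```

After the run, both sources sit in one `sales` table with a single schema, which is the "consistent format" the paragraph refers to.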

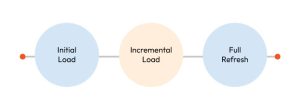

Implementing the ETL Process in the Data Warehouse

The ETL process includes three steps:

- Extract

In this step, data is extracted from the source systems into a staging area. Transformations can then be done in the staging area without degrading the performance of the source systems. Also, if you copy corrupted data directly from the source into the data warehouse database, restoring it could be a challenge; the staging area lets users validate extracted data before moving it into the data warehouse. The data warehouse must integrate systems that use different hardware, DBMSs, operating systems, and communication protocols. Sources include legacy applications such as custom applications and mainframes, point-of-contact (POC) devices such as call switches and ATMs, text files, ERP systems, spreadsheets, and data from partners and vendors. As a result, you need a logical data map before extracting data and physically loading it. The data map describes the relationship between the source data and the target data.
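A minimal sketch of extraction into a staging area with validation, in Python. `DATA_MAP` plays the role of the logical data map; the column names, the validation rules, and the use of an in-memory list as the staging area are hypothetical assumptions made for illustration (a real staging area would more likely be dedicated tables or files).

```python
# Hypothetical logical data map: source column name -> target column name.
DATA_MAP = {
    "cust_no": "customer_id",
    "cust_nm": "customer_name",
    "ord_amt": "order_amount",
}


def extract_to_staging(source_rows):
    """Copy source rows into a staging list, renaming columns per DATA_MAP.

    Source rows are only read, so the source system is left untouched.
    Columns missing from a source row become None in the staged row.
    """
    return [
        {target: row.get(source) for source, target in DATA_MAP.items()}
        for row in source_rows
    ]


def is_valid(row):
    """Hypothetical rule: reject a missing/non-numeric key or a missing amount."""
    customer_id = row["customer_id"]
    return (
        customer_id is not None
        and str(customer_id).isdigit()
        and row["order_amount"] is not None
    )


def validate(staged_rows):
    """Split staged rows into rows safe to load and rows to investigate."""
    valid = [row for row in staged_rows if is_valid(row)]
    rejected = [row for row in staged_rows if not is_valid(row)]
    return valid, rejected


if __name__ == "__main__":
    source = [
        {"cust_no": "101", "cust_nm": "Alice", "ord_amt": "19.99"},
        {"cust_no": "", "cust_nm": "Corrupt", "ord_amt": "5.00"},  # bad key
        {"cust_no": "102", "cust_nm": "Bob"},  # missing amount
    ]
    valid, rejected = validate(extract_to_staging(source))
    print(len(valid), len(rejected))  # 1 2
```

Only the rows in `valid` would move on toward the warehouse; the rejected ones stay in staging where they can be inspected without touching either the source or the target.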

- Transform

The data extracted from the source server is raw, often incomplete, and not usable in its original form, so you need to cleanse, map, and transform it. This is the most important step: here the ETL process enriches and alters the data so it can be used to generate useful BI reports. In this second step, you apply a set of functions to the extracted data. Data that doesn't need any transformation is called pass-through data or a direct move. You can also run custom operations on the data. For example, a user may want total sales revenue, which is not stored in the database and must be calculated; or a table may hold first and last names in separate columns, which can be combined into a single column before loading.
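The transformations described above can be sketched as row-level Python functions. This example assumes a hypothetical order schema (`order_id`, `first_name`, `last_name`, `unit_price`, `quantity`); it shows a pass-through column, two name columns merged into one, and a revenue figure derived because the source does not store it.

```python
from decimal import Decimal


def transform(row):
    """Apply example transformation rules to one extracted order row.

    The input schema (order_id, first_name, last_name, unit_price, quantity)
    is hypothetical.
    """
    return {
        # Pass-through (direct move): copied without any change.
        "order_id": row["order_id"],
        # Cleanse and integrate: trim whitespace, merge two name columns into one.
        "customer_name": f"{row['first_name'].strip()} {row['last_name'].strip()}",
        # Derived value not stored in the source: revenue for this order line.
        "revenue": Decimal(row["unit_price"]) * int(row["quantity"]),
    }


def total_sales_revenue(rows):
    """Aggregate the derived revenue across all transformed rows."""
    return sum((r["revenue"] for r in rows), Decimal("0"))


if __name__ == "__main__":
    extracted = [
        {"order_id": 1, "first_name": " Ada ", "last_name": "Lovelace",
         "unit_price": "19.99", "quantity": "3"},
        {"order_id": 2, "first_name": "Alan", "last_name": "Turing",
         "unit_price": "5.00", "quantity": "2"},
    ]
    transformed = [transform(r) for r in extracted]
    print(transformed[0]["customer_name"])   # Ada Lovelace
    print(total_sales_revenue(transformed))  # 69.97
```

`Decimal` is used instead of `float` so currency arithmetic does not pick up binary rounding errors; that is a choice made for this sketch, not something the ETL process itself requires.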

- Load

The last step of the ETL process is loading data into the target database of the data warehouse. In a typical data warehouse, large volumes of data have to be loaded in a comparatively short period, so the loading process needs to be optimized for performance. If a load fails, a recovery mechanism should be configured to restart from the point of failure without losing data integrity. Administrators should be able to monitor, resume, and cancel loads according to server performance.

The Benefits of ETL for Businesses

There are many reasons to include the ETL process within your organization. Here are some of the key benefits:

Enhanced Business Intelligence

Adopting the ETL process can greatly improve how you access your data. It helps you pull up the most relevant datasets when making a business decision. Those decisions have a direct impact on your operational and strategic tasks, and better-informed ones give you an upper hand.

Substantial Return on Investment

Managing massive volumes of data isn't easy. With the ETL process, you can organize data and make it understandable without wasting resources. This lets you put all the collected data to good use and paves the way for a higher return on investment.

Performance Scalability

As business trends and market dynamics evolve, your company's resources and technology need to advance with them. With an ETL system, you can add the latest technologies on top of your existing infrastructure, which simplifies the resulting data processes.

Unlock the Full Potential of Your Data

Every business around the world, whether small, mid-sized, or large, has an extensive amount of data. However, this data is of little value without a robust process to gather and organize it. Implementing ETL in data warehousing gives decision-makers the full context of the business. The process is flexible and agile, allowing you to swiftly load data, transform it into meaningful information, and use it to conduct business analysis.