(TLDR: see our ironically written AI-generated summary here)

In The Hitchhiker’s Guide to the Galaxy, a race of hyper-intelligent beings builds a giant super-intelligence to answer the ultimate question of life, the universe, and everything. After millions of years of computation, the machine finally reveals the answer: 42.

A perfect answer to the wrong question.

That moment feels uncomfortably familiar with today’s AI landscape. Every business leader is scrambling for “the answer” with questions like:

Which AI model should we use? How do we reduce costs? How do we get ROI? How do we scale? How do we stay ahead?

Ironically, the unknown questions, the ones leaders don’t yet know how to articulate, are often the most critical. They define strategy, risk, value creation, and competitive edge. According to McKinsey’s State of AI 2025 report, while 88% of organizations use AI in at least one function, the vast majority are still stuck in “pilot purgatory,” unable to scale across the enterprise. Furthermore, a 2025 IBM survey reveals that 42% of business leaders cite inadequate financial justification or business cases as a primary barrier, with nearly half worrying about data accuracy.

That’s why there’s so much confusion in the market.

- CEOs feel pressure to adopt AI but can’t pinpoint a clear use case.

- CTOs can deploy models but struggle to operationalize them.

- CFOs want measurable ROI, but the metrics haven’t caught up yet.

- Teams experiment with chatbots while the real value is in automation, orchestration, and agents.

But now, we need to shift from looking for answers to asking the right questions.

And that shift is exactly where agentic AI enters: a system designed not just to deliver answers, but to help organizations uncover the right questions and turn them into coordinated, measurable action.

To unpack this shift, the rest of the article explores:

- Generative AI vs. Agentic AI: The Distinction That Changes Everything

- Why Leaders Feel Frustrated with AI ROI

- The Core Pain Points Pushing Us Toward AI Agents

- The Hidden Challenges of Building Agentic AI

- What Are the Right AI Questions to Ask?

- The Value of Agentic AI Goes Beyond Autonomy

- TLDR: The AI-Generated Summary

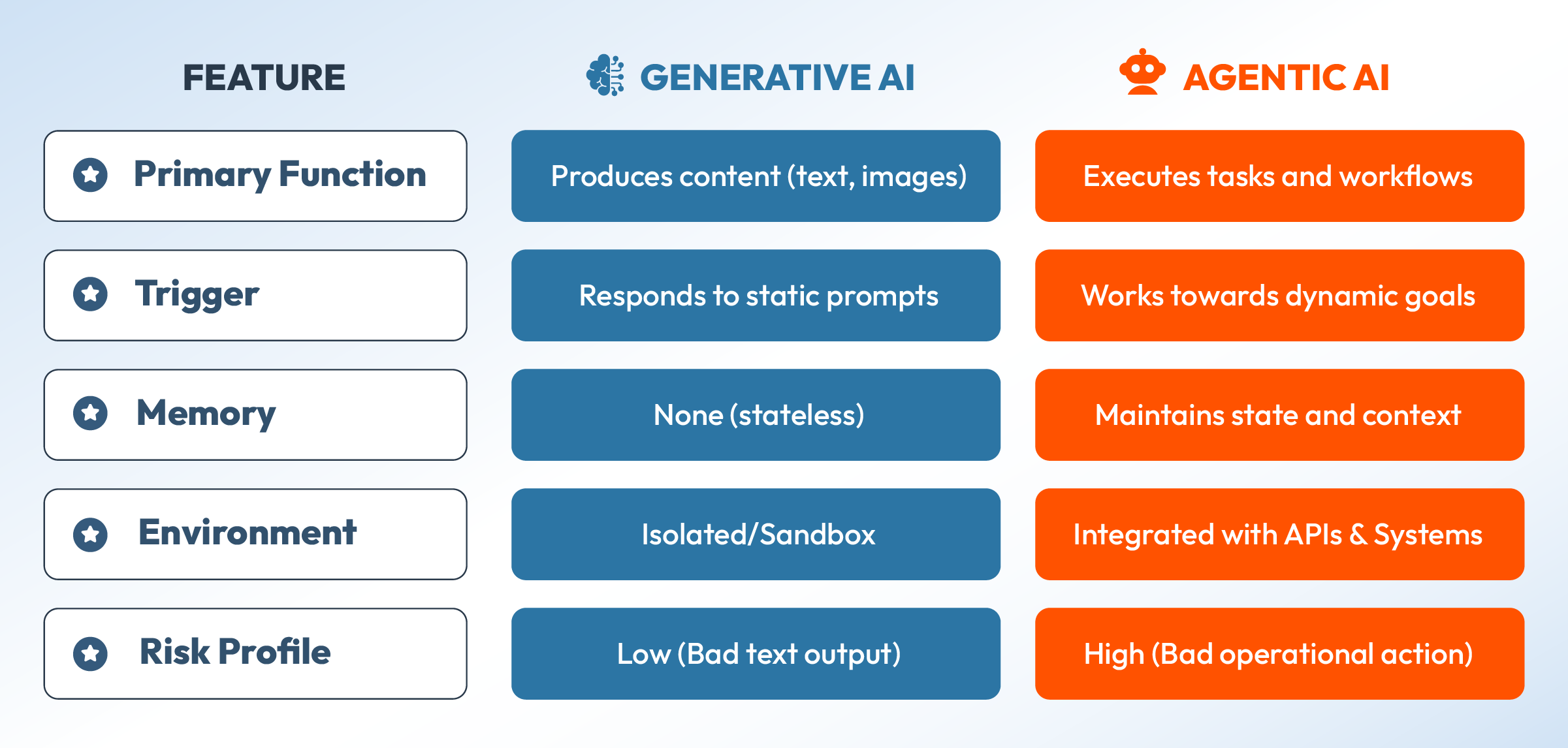

I. Generative AI vs. Agentic AI: The Distinction That Changes Everything

Generative AI, at its simplest, can be understood as an extremely smart search and content creation system: one that doesn’t just fetch information but reshapes it, explains it, and lets us interact with knowledge the way we would with a human tutor.

If we don’t understand a concept, we ask again; if a word confuses us, it breaks it down; if a research paper feels dense, it guides us through it. That’s the power of generative AI.

Now, here’s the interesting part: if an AI can understand concepts well enough to teach a human, then it should also be able to apply those concepts in the real world if a human gives it the right instructions. Humans make decisions by gathering information, checking metrics, estimating probabilities, and understanding what might go right or wrong. And AI can follow the same reasoning pattern. The only thing missing is the ability to take action.

This is where agentic AI comes in. It’s simply generative AI equipped with tools and autonomy, allowing it not just to answer questions but to execute tasks on our behalf.

Generative AI helps us understand things, while agentic AI helps us get things done.

Generative AI (The Writer)

Generative AI is a probability engine. It predicts the most likely next piece of output, one step at a time, to create text, code, or images. It’s excellent for summaries, drafting, and first-pass analysis.

The limitation? It has no memory, no autonomy, and no understanding of your business rules. It’s passive.

Agentic AI (The Employee)

Agentic AI is the next layer. It moves from answering questions to executing tasks. Whether using API tools, MCP servers, or UI automation, it pursues goals.

The capability? It takes action inside your systems (e.g., “Refund this customer if the policy allows” or “Move this lead to Salesforce”).
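To make the writer-versus-employee contrast concrete, here is a minimal sketch in Python. Everything in it is hypothetical: `fake_model` stands in for a foundation model, `refund_customer` is an invented tool, and the 30-day refund window is an assumed policy, not a real one. The only point is the shape of the flow: the model proposes a structured tool call, and the system checks a business rule before anything executes.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToolCall:
    name: str
    args: dict


def fake_model(prompt: str) -> ToolCall:
    """Hypothetical stand-in for a foundation model that proposes a tool call."""
    # A real model would derive this from the prompt; here it is hard-coded.
    return ToolCall(
        name="refund_customer",
        args={"order_id": "A-100", "days_since_purchase": 12},
    )


REFUND_WINDOW_DAYS = 30  # assumed business rule, for illustration only


def refund_customer(order_id: str, days_since_purchase: int) -> str:
    """Hypothetical tool: applies the policy check before acting."""
    if days_since_purchase > REFUND_WINDOW_DAYS:
        return f"refund denied for {order_id}: outside policy window"
    return f"refund issued for {order_id}"


# Registry of tools the agent is allowed to use.
TOOLS: dict[str, Callable[..., str]] = {"refund_customer": refund_customer}


def run_agent(prompt: str) -> str:
    """Ask the model what to do, then execute only registered tools."""
    call = fake_model(prompt)
    tool = TOOLS.get(call.name)
    if tool is None:
        return f"unknown tool: {call.name}"
    return tool(**call.args)


result = run_agent("Refund this customer if the policy allows")
print(result)
```

A purely generative system would stop at describing the refund policy; the agentic path above is the part that actually changes state in a business system, which is why the rule check sits inside the tool rather than in the prompt.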

The Comparison

Generative AI answers. Agentic AI executes.

Where Do ChatGPT, Gemini, Copilot, Claude, and Perplexity Fit?

A common point of confusion is this:

If generative AI and agentic AI are the two big categories… then where do everyday tools like ChatGPT, Gemini, Copilot, Claude, and Perplexity fit in?

The answer is that they’re neither pure generative AI nor pure agentic AI. They are products: fully packaged experiences built on top of generative models.

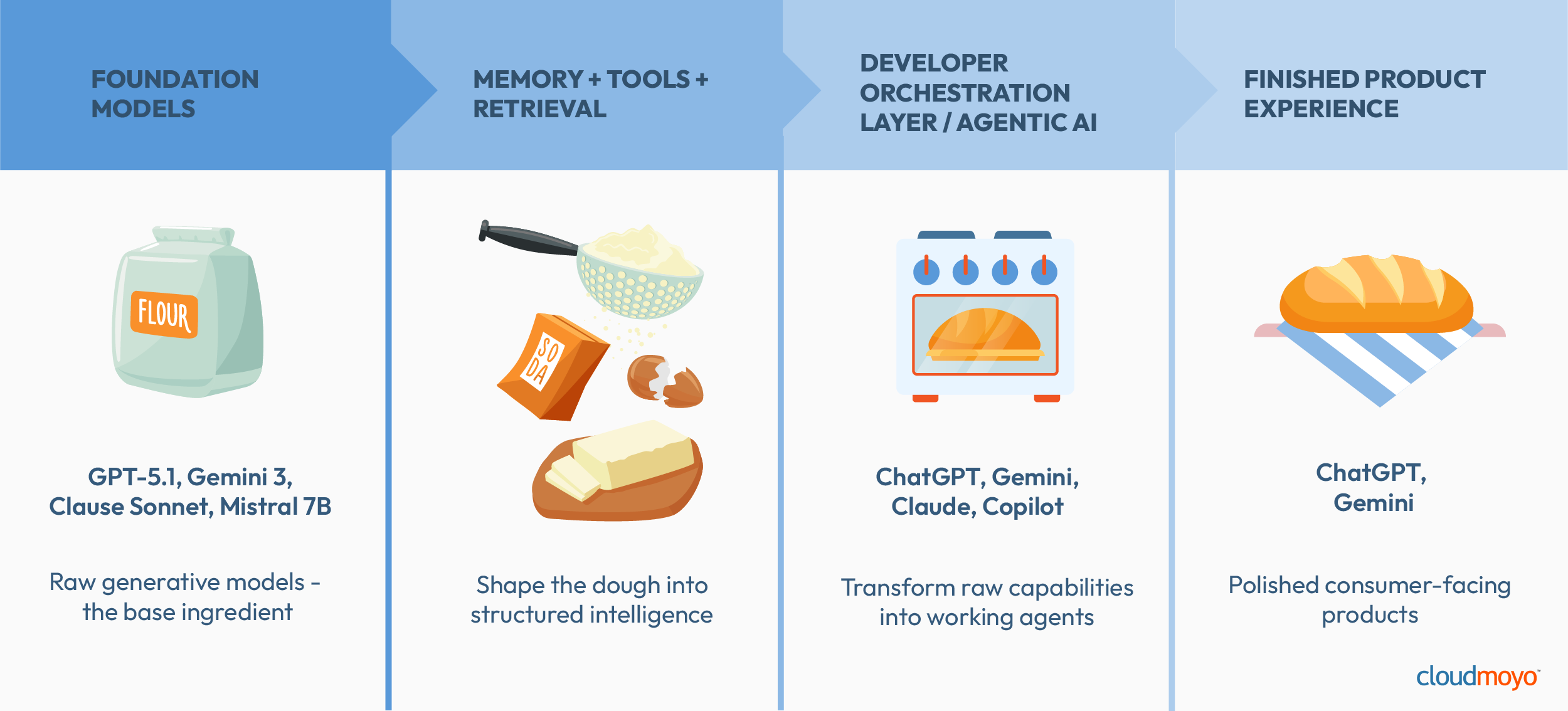

Your developers do not interact with “ChatGPT” (the product) when building enterprise systems. They use raw Foundation Models like GPT-5.1, Claude Sonnet, Gemini 3, or Llama 3. These foundation models are the ingredients, not the finished dish.

To make this distinction clearer, think about baking bread:

- Flour → the foundation model (GPT-5.1, Gemini 3, Claude Sonnet). It’s the core ingredient. Without it, nothing forms.

- Butter, sugar, salt, yeast → memory systems, vector stores, tools, retrieval, and reasoning frameworks. These shape the dough, giving it structure, flavor, and function.

- Oven → the development layer where engineers knead, test, refine, and finally “bake” the system into something stable and usable.

- The finished warm loaf served → ChatGPT, Copilot, Claude, Gemini, and similar tools: user-facing products built on top of many hidden layers of engineering, tooling, and orchestration.

So, when we talk about generative AI vs agentic AI in an enterprise context, we’re not talking about the consumer product you interact with daily. We’re talking about the underlying technical ingredients that developers use to build copilots, automation systems, reasoning pipelines, and eventually autonomous agents.

In other words:

ChatGPT isn’t “generative AI.” ChatGPT is what you get when developers take generative AI, surround it with memory and tools, orchestrate it in an “oven,” and present it as a polished product.

This is why the distinction matters. Businesses don’t deploy ChatGPT. They deploy systems built from the same underlying ingredients used to build ChatGPT.

Once AI takes action, the technical complexity multiplies. You’re no longer experimenting with a chatbot. You’re enabling autonomous decision-making inside your revenue engine.

II. Why Leaders Feel Frustrated with AI ROI

Now that we’ve established what generative and agentic AI are and are not, here’s our next question.

If AI is so powerful, then why are the returns so mixed?

In a 2025 Gartner poll, nearly half of executives reported that their AI initiatives delivered ROI below expectations. The hurdles are rarely just about “better algorithms.” They’re about people, processes, and data.

Here’s where the data points:

- The People Gap Shift: It’s no longer just about hiring data scientists. It’s about hiring people who understand AI governance. Nearly half of CDAOs cite lack of skills as the top barrier to success across both generative and traditional AI.

- Process Readiness Gap: Most organizations aren’t blocked by technology; they’re blocked by how work is processed. People don’t know how to embed AI into their day-to-day processes, so the work stays manual and repetitive.

- Lack of Data Readiness is the Silent Killer: You cannot build an intelligent agent on dumb data. IBM’s 2025 adoption survey highlights that 45% of leaders are stalled by concerns over data accuracy and bias. If your data fields are missing or inconsistent, an agent won’t just give you a bad summary; it will execute a bad transaction.

- The “Agent Washing” Trap: Organizations must guard against “agent washing,” meaning vendors simply relabeling basic software as “AI Agents.” Gartner analyst Anushree Verma noted in 2025 that out of thousands of supposed Agentic AI products, only ~130 truly have advanced autonomous capabilities.

These frustrations are pushing leaders toward something more capable than a chatbot. They need systems that do, not just say.

III. The Core Pain Points Pushing Us Toward AI Agents

So, why are companies risking this complexity? It’s because the current way of working is breaking down.

- Operational Drowning: Teams are buried in micro-decisions: approvals, reconciliations, and compliance checks. Generative AI can’t click “approve” in SAP; agents can.

- Complex Workflows: Real business isn’t linear. It’s a tree of “If-This-Then-That” exceptions. Traditional RPA (Robotic Process Automation) is too rigid, breaking whenever a button moves. Agentic AI is flexible enough to handle the “Unless condition B” scenarios.

- Scale: Analysts project that by 2028, at least 15% of day-to-day work decisions will be made autonomously by agents. No company can hire enough humans to manually oversee that volume of work.

- Cost: Economics are forcing the shift. In 2025 surveys, 57% of organizations adopting AI agents reported measurable cost savings, particularly through automation of repetitive tasks and faster decision-making. Additionally, analysts predict that autonomous agentic AI could cut up to about 30% of operational costs in functions like customer service by 2029.

IV. The Hidden Challenges of Building Agentic AI

When you transition from a “Chatbot” to an “Agent,” you need a new Software Development Life Cycle (SDLC). Leaders who skip this step walk right into what I call the “Agent Spiral.”

Challenge 1: Agent Sprawl (The New API Bloat)

Without governance, organizations rapidly accumulate hundreds of single-purpose agents. This creates a “shadow workforce” that is unmanaged, duplicative, and impossible to audit.

Challenge 2: The “GOTO” Problem (Agents Calling Agents)

What happens when the Sales Agent asks the Inventory Agent for stock, but the Inventory Agent triggers the Procurement Agent? You get opaque execution flows and potential infinite loops. If something breaks, tracing the failure path becomes a nightmare.
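One common mitigation is to carry the call chain along with every agent-to-agent request, so loops are caught and the failure path is visible. The sketch below assumes a hypothetical routing table (`ROUTES`) and an arbitrary depth limit; real orchestration frameworks expose this differently, so treat it as an illustration of the idea rather than any framework's API.

```python
class AgentLoopError(RuntimeError):
    """Raised when an agent call chain revisits an agent or runs too deep."""


MAX_DEPTH = 5  # assumed limit, for illustration only

# Hypothetical routing table: which agent each agent delegates to.
ROUTES = {
    "sales": "inventory",
    "inventory": "procurement",
    "procurement": "sales",  # deliberately closes the loop
}


def call_agent(name: str, trace: tuple[str, ...] = ()) -> tuple[str, ...]:
    """Follow delegations, carrying the full call chain as a trace."""
    if name in trace:
        # The trace doubles as the audit trail for the failure path.
        raise AgentLoopError(" -> ".join(trace + (name,)))
    if len(trace) >= MAX_DEPTH:
        raise AgentLoopError("max depth exceeded: " + " -> ".join(trace))
    trace = trace + (name,)
    next_agent = ROUTES.get(name)
    if next_agent is None:
        return trace  # chain ended normally
    return call_agent(next_agent, trace)


loop_path = ""
try:
    call_agent("sales")
except AgentLoopError as err:
    loop_path = str(err)
    print("loop detected:", loop_path)
```

Because the trace travels with the request, the error message itself shows which agents were involved and in what order, which is the information that is otherwise hardest to reconstruct after the fact.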

Challenge 3: Testing the Untestable

Traditional QA fails here. You can’t just test inputs and outputs because agents behave dynamically. Evaluation in 2025 requires simulation environments and synthetic data to test “trajectories,” not just answers.
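As a rough illustration of what testing a trajectory means, the sketch below checks the order of steps an agent took rather than its final answer. The `toy_agent`, the step names, and the 500 approval threshold are all invented for this example; a real evaluation harness would record steps from actual agent runs.

```python
APPROVAL_THRESHOLD = 500  # assumed policy threshold, for illustration only


def toy_agent(ticket: dict) -> list[str]:
    """Hypothetical agent; returns the ordered list of steps it took."""
    steps = ["lookup_order"]
    if ticket["amount"] > APPROVAL_THRESHOLD:
        steps.append("request_human_approval")
    steps.append("issue_refund")
    return steps


def approval_precedes_refund(trajectory: list[str], amount: float) -> bool:
    """Trajectory-level check: large refunds must be approved before issue."""
    if "issue_refund" not in trajectory or amount <= APPROVAL_THRESHOLD:
        return True
    if "request_human_approval" not in trajectory:
        return False
    return (
        trajectory.index("request_human_approval")
        < trajectory.index("issue_refund")
    )


# Synthetic inputs chosen to sit on both sides of the threshold.
synthetic_tickets = [{"amount": a} for a in (20, 499, 500, 501, 5000)]
results = [
    approval_precedes_refund(toy_agent(t), t["amount"])
    for t in synthetic_tickets
]
print(all(results))
```

Two runs can end with the same "refund issued" answer while only one of them asked for approval first, which is exactly the kind of difference an input/output test cannot see.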

Challenge 4: The Data Governance Burden

Tools are deterministic; agents are probabilistic. Wiring the two together blurs that boundary and creates real risk. One wrong decision by an autonomous agent can result in financial loss or compliance violations. Gartner predicts that over 40% of agentic AI projects will be canceled by the end of 2027, with inadequate risk controls and unclear business value among the reasons.

V. What Are the Right AI Questions to Ask?

Throughout this article, one theme has kept resurfacing:

AI doesn’t break because of the answers it gives—it breaks because of the questions we ask. The Hitchhiker’s paradox wasn’t that “42” was wrong. It was that nobody knew what they were solving for. Most companies today are in the same boat.

The questions that actually move an organization from “AI curiosity” to “AI advantage” start with clarity: not on the model, not on the cost, not on the hype, but on the business problem worth solving.

Questions to Ask: Is AI just a line item…or a competitive advantage?

Here’s your North Star Question:

“What business decision or workflow, if improved by 10x, would meaningfully change our company’s trajectory?”

But below are more questions to consider.

1. What problem in my business is worth automating or augmenting?

Not everything needs an agent. Not everything deserves automation. Start with:

- Repetitive workflows

- High-volume decision points

- Predictable rules

- Areas with measurable outcomes

2. Do we have the data maturity required to support an intelligent agent?

An agent is only as smart as the data feeding it. The right questions:

- Is our data complete?

- Is it accurate?

- Does the system know our business rules?

- Could an autonomous decision cause risk if the data is wrong?

If the answers are shaky, the agent will be shakier.
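These questions can be turned into a simple gate that runs before an agent is allowed to act. The sketch below is a minimal example under assumed names: the required fields and the `execute_if_ready` function are hypothetical, and a production check would cover far more than missing values.

```python
REQUIRED_FIELDS = ("customer_id", "amount", "currency")  # assumed schema


def readiness_issues(record: dict) -> list[str]:
    """Return a list of data problems; an empty list means ready to act."""
    issues = []
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            issues.append(f"missing {field}")
    amount = record.get("amount")
    if isinstance(amount, (int, float)) and amount < 0:
        issues.append("negative amount")
    return issues


def execute_if_ready(record: dict) -> str:
    """Block the action instead of executing on incomplete data."""
    issues = readiness_issues(record)
    if issues:
        return "blocked: " + ", ".join(issues)
    return "executed"


good = {"customer_id": "C1", "amount": 120.0, "currency": "USD"}
bad = {"customer_id": "C2", "amount": None, "currency": ""}
print(execute_if_ready(good))
print(execute_if_ready(bad))
```

The design choice worth noting is that bad data produces a blocked action with a reason attached, not a best-effort transaction.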

3. Which decisions can the agent make on its own, and which need a human in the loop?

This defines autonomy levels, guardrails, risk boundaries, and escalation paths. This is where AI governance becomes non-negotiable.
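Autonomy levels and escalation paths can be written down as explicit rules rather than left to the agent's judgment. The following is a sketch only: the dollar limits, the confidence floor, and the three-tier `Action` scheme are assumptions chosen for illustration, not recommended values.

```python
from enum import Enum


class Action(Enum):
    AUTO = "execute automatically"
    APPROVE = "require human approval"
    ESCALATE = "escalate to owner"


# Assumed thresholds, for illustration only.
AUTO_LIMIT = 100.0
APPROVAL_LIMIT = 1000.0
MIN_CONFIDENCE = 0.8


def decide(amount: float, confidence: float) -> Action:
    """Map a proposed action's size and the agent's confidence to a tier."""
    if confidence < MIN_CONFIDENCE or amount > APPROVAL_LIMIT:
        return Action.ESCALATE
    if amount > AUTO_LIMIT:
        return Action.APPROVE
    return Action.AUTO


print(decide(50, 0.95).value)
print(decide(400, 0.90).value)
print(decide(5000, 0.99).value)
```

Keeping the thresholds in plain configuration means the governance owner, not the model, decides where autonomy ends.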

4. What KPIs define success for our AI initiative?

“Let’s try AI and see what happens” is not a strategy. Leaders should define:

- Operational efficiency gains

- Cost reduction

- Improved cycle time

- Increased throughput

- Reduced error rate

AI ROI becomes measurable only when success becomes measurable.

5. How will these AI systems integrate into our existing workflows and systems?

Agentic AI doesn’t live in a vacuum. It must plug into the CRM, ERP, data lake, policy engines, APIs, and audit logs.

6. Who will own AI governance, risk, maintenance, and evaluation?

AI isn’t a project; it’s a capability. The right question is: who is accountable for the health, behavior, and continuous improvement of our agents? Without ownership, agent sprawl happens fast.

Once leaders start asking these kinds of questions, the fog around AI clears. The conversation shifts from “Which model should we use?” to something sharper, more strategic, and grounded in reality. From there, everything else falls into place.

VI. The Value of Agentic AI Goes Beyond Autonomy

Agentic AI is synonymous with autonomy, but its value lies beyond its ability to take action. Autonomy doesn’t equal value unless the agent is solving the right problem effectively. Companies must approach agentic AI with clear success criteria and, more importantly, strong oversight.

CloudMoyo helps enterprises build the next generation of AI capabilities. We have proven experience delivering generative AI solutions ranging from intelligent copilots to automated document and enterprise data workflows.

Building on these generative AI use cases, we also support organizations as they move toward agentic AI. Our teams work with tools like Fabric to strengthen data foundations, advanced LLM services like Azure OpenAI to provide reasoning and decision-making, and orchestration frameworks like LangChain and AutoGen to bring multiple AI components together.

With an agile approach, CloudMoyo can create intelligent systems that plan, make decisions, and take action across business processes and industries. Our goal is to help enterprises move confidently from automation to autonomy while following industry best practices, especially in information security.

While your teams chill with our PDO (Paw-ductivity Officer)… we’ll handle your agents’ tantrums.

VII. TLDR: The AI-Generated Summary

300-Word Summary

In Beyond the Chatbot: Why Leaders Are Pivoting to Agentic AI in 2025, the article argues that the biggest failure in today’s AI adoption isn’t technological—it’s conceptual. Drawing inspiration from The Hitchhiker’s Guide to the Galaxy, it highlights a familiar paradox: organizations are getting increasingly sophisticated AI answers without first asking the right questions.

Most enterprises today are stuck in what surveys call “pilot purgatory.” While generative AI tools are widely adopted, they often remain confined to chatbots, summaries, and experimentation. Leaders feel pressure to “do something with AI,” yet struggle to translate pilots into measurable business impact. CEOs want strategic advantage, CTOs can deploy models but not scale them, and CFOs demand ROI metrics that rarely exist.

The article clarifies a critical distinction: generative AI explains, agentic AI executes. Generative AI acts like a skilled writer or analyst—excellent at producing content but fundamentally passive. Agentic AI, by contrast, introduces autonomy, memory, and tool integration, allowing systems to take actions within real business workflows. This shift—from answers to execution—is what unlocks enterprise value, but it also multiplies risk and complexity.

Moving toward Agentic AI exposes new challenges: poor data quality, “agent washing” by vendors, governance gaps, talent shortages, and operational risks when probabilistic systems take real-world actions. Without proper guardrails, organizations face agent sprawl, opaque decision paths, and untestable behaviors.

Ultimately, the article reframes the AI conversation around one central idea: AI fails not because of bad answers, but because of poorly framed questions. The right starting point isn’t “Which model should we use?” but “Which business decision, if improved 10x, would meaningfully change our trajectory?” When leaders focus on clarity, governance, and intentional autonomy, AI stops being a demo—and starts becoming a durable competitive advantage.

500-Word Summary

This article makes a clear and timely case: the next evolution of enterprise AI is not about better chatbots, larger models, or faster responses—it’s about learning to ask better questions and enabling systems that can act on them.

Using The Hitchhiker’s Guide to the Galaxy as a framing metaphor, the author draws a parallel between today’s AI landscape and the infamous answer “42”: technically correct, yet strategically useless without the right question. Business leaders today face a similar dilemma. They ask about models, costs, and scale, while overlooking the deeper issue—what decisions or workflows AI should actually improve.

Despite widespread AI adoption, most organizations remain stuck in pilots. Industry data from McKinsey, IBM, and Gartner shows that while AI usage is high, enterprise-wide impact is limited. The reasons are consistent: unclear business cases, insufficient data readiness, weak governance, and a focus on experimentation over execution.

The article introduces a foundational distinction between generative AI and agentic AI. Generative AI excels at producing content, explanations, and first-pass analysis, but remains passive and stateless. Agentic AI builds on these models by adding memory, tools, autonomy, and integration with enterprise systems—allowing AI to plan, decide, and act. In simple terms, generative AI helps organizations think; Agentic AI helps them operate.

This shift, however, comes with real risks. Once AI systems begin executing tasks—approving transactions, moving data, triggering workflows—the technical and governance complexity increases dramatically. The article highlights several hidden challenges: agent sprawl, opaque agent-to-agent interactions, the difficulty of testing non-deterministic systems, and heightened compliance and audit risks. Without clear ownership and guardrails, agentic systems can quickly become liabilities rather than assets.

At the core of the article is a reframing of leadership responsibility. AI initiatives fail not because leaders are careless, but because the right questions were never obvious. The author proposes a new north star: Which business decision or workflow, if improved by 10x, would meaningfully change the company’s trajectory? From there, leaders must evaluate data readiness, define autonomy boundaries, establish KPIs, integrate with existing systems, and assign governance accountability.

The conclusion is clear and pragmatic. Agentic AI delivers value not through autonomy alone, but through intentional, constrained, and measurable action. When organizations align AI initiatives with real business problems, strong data foundations, and disciplined governance, AI moves beyond demos and hype. It becomes operational infrastructure—and a true competitive differentiator.

References

- The State of AI: Global Survey 2025 | McKinsey

- https://www.ibm.com/think/insights/ai-adoption-challenges

- https://www.gartner.com/en/newsroom/press-releases/2025-06-25-gartner-predicts-over-40-percent-of-agentic-ai-projects-will-be-canceled-by-end-of-2027

- https://platform.openai.com/docs

- https://deepmind.google/technologies/gemini/

- https://learn.microsoft.com/en-us/copilot/

- Designing a Successful Agentic AI System

- https://www.kaggle.com/whitepaper-introduction-to-agents

- Agentic AI – the new frontier in GenAI

- Agentic AI Is Rising And Will Reforge Businesses That Embrace It

- CEO strategies for leading in the age of agentic AI | McKinsey

- ebook_mit-cio-generative-ai-report.pdf

Disclaimer: Humans led the thinking. Research and writing were supported by OpenAI, Google Gemini, Perplexity, and an unreasonable number of browser tabs. No AI was harmed in the making of this article. Nor did humans edit the TLDR summary…

Mohit Chhajed

Mohit Chhajed is an AI/ML Engineer at CloudMoyo. He earned a Bachelor's degree in Electronics and Communications Engineering, as well as a Master of Science in Data Analytics and Engineering from Northeastern University. Mohit is proficient in spearheading Business Intelligence and Advanced Analytics initiatives and brings a balanced leadership style that emphasizes both results and fostering a collaborative environment. His commitment to continuous learning and knowledge sharing ensures the development of innovative solutions while nurturing a culture of growth.